ShellCheck is a static analysis tool that points out common problems and pitfalls in shell scripts.

As of last weekend it appears to have become GitHub’s most starred Haskell repository, after a mention in MIT SIPB’s Writing Safe Shell Scripts guide.

While obviously a frivolous metric in a niche category, I like to interpret this as meaning that people are finding ShellCheck as useful as I find Pandoc, the excellent universal document converter I use for notes, blog posts and ShellCheck’s man page, and which held a firm grip on the top spot for a long time.

I am very happy and humbled that so many people are finding the project helpful and useful. The response has been incredibly and overwhelmingly positive.

Several times per week I see mentions from people who tried it out, and it either solved their immediate problem, or it taught them something new and interesting they didn’t know before.

I started the project 8 years ago, and this seems like a good opportunity to share some of the lessons learned along the way.

Quick History

ShellCheck is generally considered a shell script linter, but it actually started life in 2012 as an IRC bot (of all things!) on #bash@Freenode. It’s still there and as active as ever.

The channel is the home of the comprehensive and frequently cited Wooledge BashFAQ, plus an additional list of common pitfalls. Between them, they currently cover 178 common questions about Bash and POSIX sh.

Since no one ever reads the FAQ, an existing bot allowed regulars to e.g. answer any problem regarding variations of for file in `ls` with a simple !pf 1, and let a bot point the person in the right direction (the IRC equivalent of StackOverflow’s "duplicate of").

ShellCheck’s original purpose was essentially to find out how many of these FAQs could be classified automatically, without any human input.

Due to this, ShellCheck was designed for different goals than most linters.

- It would only run on buggy scripts, because otherwise they wouldn’t have been posted.

- It would only run once, and should be as helpful as possible on the first pass.

- It would run on my machine, not on arbitrary users’ systems.

This will become relevant.

On Haskell

Since ShellCheck was a hobby project that wasn’t intended to run on random people’s machines, I could completely ignore popularity, familiarity, and practicality, and pick the language that was the most fun and interesting.

That was, of course, Haskell.

As anyone who looks at the code will quickly conclude, ShellCheck was my first real project in the language.

Some things worked really well:

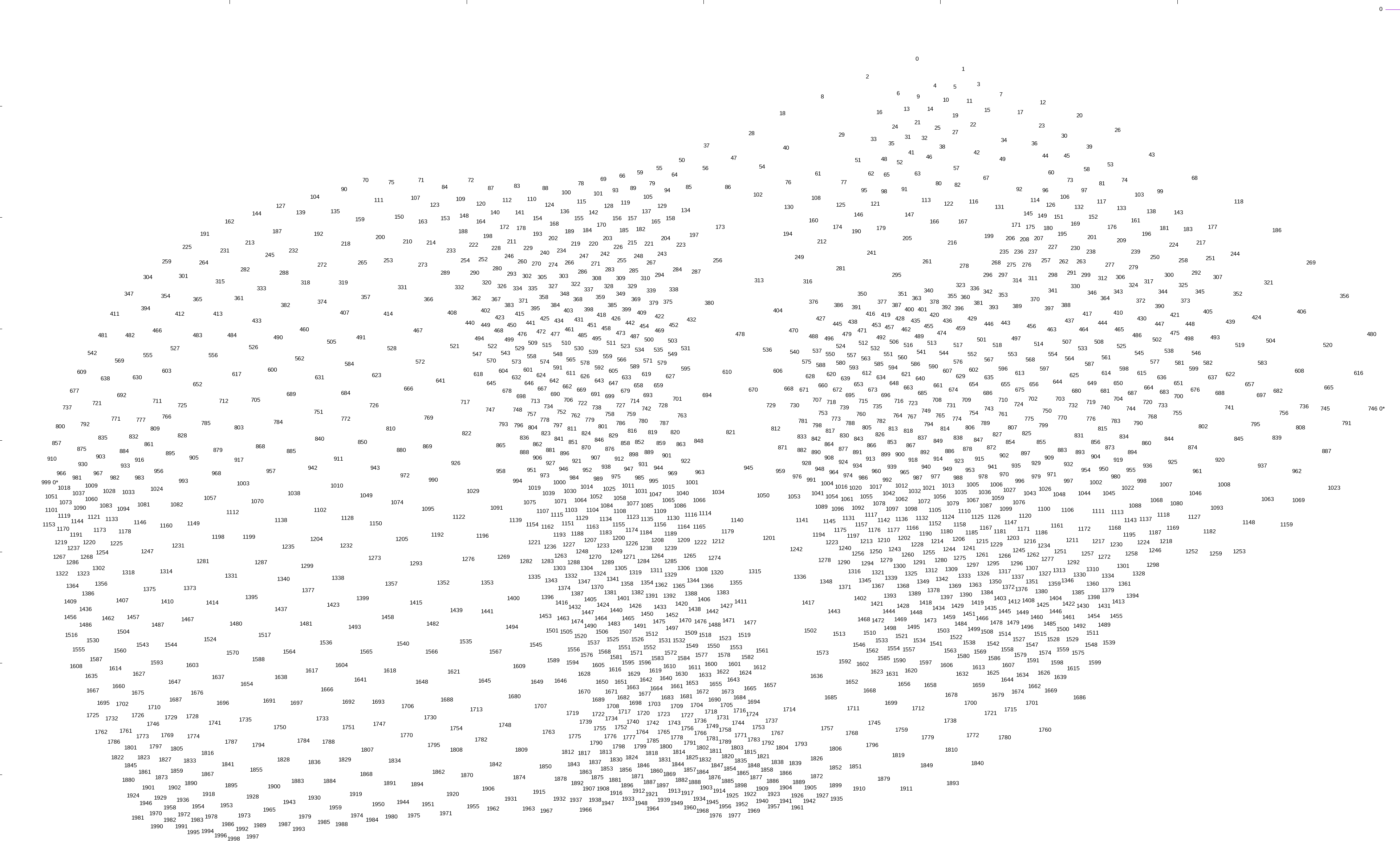

- QuickCheck has been beyond amazing. ShellCheck has 1500 unit tests just because they’re incredibly quick and convenient to write. It’s so good that I’m adding a subsection for it.

- Parsec is a joy to write parsers in. Initially I dreaded e.g. implementing backticks because they require recursively re-invoking the parser on escaped string data, but every time I faced such issues, they turned out to be much easier than expected.

- Haskell itself is a very comfy, productive language to write. It’s not at all arcane or restrictive as first impressions might have you believe. I’d take it over Java or C++ for most things.

- Haskell is surprisingly portable. I was shocked when I discovered that people were running ShellCheck natively on Windows without problems. ARM required a few code changes at the time, but wouldn’t have today.

Some things didn’t work as well:

- Haskell has an undeniably high barrier to entry for the uninitiated, and ShellCheck’s target audience is not Haskell developers. I think this has seriously limited the scope and number of contributions.

- It’s easier to write than to run: it’s been hard to predict and control runtime performance. For example, many of ShellCheck’s check functions take an explicit "params" argument. Converting them to a cleaner ReaderT led to a 10% total run time regression, so I had to revert it. It makes me wonder about the speed penalty of code I designed better to begin with.

- Controlling memory usage is also hard. I dropped multithreading support because I simply couldn’t figure out the space leaks.

- For people not already invested in the ecosystem, the runtime dependencies can be 100MB+. ShellCheck is available as a standalone ~8MB executable, which took some work and is still comparatively large.

- The Haskell ecosystem moves and breaks very quickly. New changes would frequently break on older platform versions. Testing the default platform version of mainstream distros in VMs was slow and tedious. Fortunately, Docker came along to make it easy to automate per-distro testing, and Stack brought reproducible Haskell builds.
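Space leaks are easy to create by accident because of laziness. As a generic illustration (not ShellCheck code), a lazy left fold can accumulate a chain of unevaluated thunks, while the strict `foldl'` from `Data.List` forces its accumulator at every step. Even then, a lazy pair accumulator can still leak:

```haskell
import Data.List (foldl')

-- Lazy left fold: conceptually builds the thunk (((0 + 1) + 2) + ...) before
-- adding anything up, so memory grows with the length of the input.
-- (With optimization, GHC's strictness analysis can sometimes rescue this.)
leakySum :: [Int] -> Int
leakySum = foldl (+) 0

-- Strict left fold: the accumulator is forced at every step,
-- so the running total stays a single evaluated Int.
strictSum :: [Int] -> Int
strictSum = foldl' (+) 0

-- A subtler leak: foldl' only forces the pair constructor (weak head normal
-- form), not the counters inside it, so "evens + 1" thunks can still pile up.
countLeaky :: [Int] -> (Int, Int)
countLeaky = foldl' step (0, 0)
  where
    step (evens, odds) x
      | even x    = (evens + 1, odds)
      | otherwise = (evens, odds + 1)

-- Fix: force each new counter with seq before building the pair
-- (bang patterns or a strict data type would work as well).
countStrict :: [Int] -> (Int, Int)
countStrict = foldl' step (0, 0)
  where
    step (evens, odds) x
      | even x    = let e = evens + 1 in e `seq` (e, odds)
      | otherwise = let o = odds + 1 in o `seq` (evens, o)
```

All four functions return the same results; they differ only in how much memory they may hold on to. Whether a given version actually leaks depends on optimization flags, so GHC's heap profiler is usually a more reliable guide than reasoning alone.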

If starting a new developer tooling project for a mainstream audience, I might choose a more mainstream language. I’d also put serious consideration into how well the language runs on a JSVM, since (love it or hate it) this would solve a lot of distribution, integration, and portability issues.

ShellCheck’s API is not very cohesive. If starting a new project in Haskell today, I would start out by implementing a dozen business logic functions in every part of the system in my best case pseudocode. This would help me figure out the kind of DSL I want, and help me develop a more uniform API on a suitable stack of monads.
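The trade-off between an explicit `params` argument and `ReaderT` can be sketched with a toy check. Everything here is hypothetical and much simpler than ShellCheck's real checks: the `Params` record, the `source` check and its message are invented for illustration. `Reader`, `asks` and `runReader` come from the `transformers` package that ships with GHC:

```haskell
import Control.Monad.Trans.Reader (Reader, asks, runReader)

-- Hypothetical configuration shared by every check.
newtype Params = Params { shellName :: String }

-- Style 1: the parameters are an explicit argument of every check.
checkSourceExplicit :: Params -> String -> [String]
checkSourceExplicit params cmd =
  [ "'source' is not POSIX sh; use '.' instead." | shellName params == "sh", cmd == "source" ]

-- Style 2: the same check in the Reader monad; parameters are read on demand.
checkSourceReader :: String -> Reader Params [String]
checkSourceReader cmd = do
  sh <- asks shellName
  pure [ "'source' is not POSIX sh; use '.' instead." | sh == "sh", cmd == "source" ]

-- Monadic checks compose without threading params by hand.
checkAll :: [String] -> Reader Params [String]
checkAll cmds = concat <$> mapM checkSourceReader cmds
```

Both styles compute the same warnings; the monadic one scales more gracefully as parameters and effects are added, which is what a deliberately designed monad stack buys. Whether it costs run time depends on how well GHC optimizes it, so, as the ReaderT experience described earlier suggests, it is worth measuring.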

Unit testing made fun and easy

ShellCheck is ~10k LoC, but has an additional 1.5k unit tests. I’m not a unit testing evangelist, 100% completionist or TDD fanatic: this simply happened by itself because writing tests was so quick and easy. Here’s an example check:

```haskell
prop_checkSourceArgs1 = verify checkSourceArgs "#!/bin/sh\n. script arg"
prop_checkSourceArgs2 = verifyNot checkSourceArgs "#!/bin/sh\n. script"
prop_checkSourceArgs3 = verifyNot checkSourceArgs "#!/bin/bash\n. script arg"
checkSourceArgs = CommandCheck (Exactly ".") f
  where
    f t = whenShell [Sh, Dash] $
        case arguments t of
            (file:arg1:_) -> warn (getId arg1) 2240 $
                "The dot command does not support arguments in sh/dash. Set them as variables."
            _ -> return ()
```

The prop_.. lines are individual unit tests. Note in particular that:

- Each test simply specifies whether the given check emits a warning for a snippet. The boilerplate fits on the same line.

- The test is in the same file and place as the function, so it doesn’t require any cross-referencing.

- It doubles as a doc comment that explains what the function is expected to trigger on.

- checkSourceArgs is in OO terms an unexposed private method, but no OO BS was required to "expose it for testing".
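To make the pattern concrete without ShellCheck's internals, here is a self-contained toy version. The names `verify` and `verifyNot` mirror the snippet above, but these definitions, along with `Warning`, `Check` and `checkDotArgs`, are assumptions written for illustration: ShellCheck's real checks operate on a parsed AST rather than on raw lines.

```haskell
-- Toy model: a warning is an SC code plus a message.
data Warning = Warning { code :: Int, message :: String }

-- A check maps the full script text to the warnings it emits.
-- (ShellCheck's real checks work on a parsed AST, not on raw lines.)
type Check = String -> [Warning]

-- verify: the check emits at least one warning for the snippet.
verify :: Check -> String -> Bool
verify check script = not (null (check script))

-- verifyNot: the check stays silent for the snippet.
verifyNot :: Check -> String -> Bool
verifyNot check script = null (check script)

-- Simplified stand-in for checkSourceArgs: in sh/dash scripts,
-- flag any ". file arg ..." line that passes arguments to the dot command.
checkDotArgs :: Check
checkDotArgs script =
  case lines script of
    (shebang : body)
      | shebang `elem` ["#!/bin/sh", "#!/bin/dash"] ->
          [ Warning 2240 "The dot command does not support arguments in sh/dash."
          | ("." : _file : _arg : _) <- map words body
          ]
    _ -> []

-- The tests sit right next to the check and double as documentation.
prop_checkDotArgs1 = verify checkDotArgs "#!/bin/sh\n. script arg"
prop_checkDotArgs2 = verifyNot checkDotArgs "#!/bin/sh\n. script"
prop_checkDotArgs3 = verifyNot checkDotArgs "#!/bin/bash\n. script arg"
```

Loaded into GHCi, each `prop_` binding is just a `Bool`, so evaluating `prop_checkDotArgs1` returns `True`.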

Even the parser has tests like this, where it can check whether a given function parses the given string cleanly or with warnings.

QuickCheck is better known for its ability to generate test cases for invariants, which ShellCheck makes some minimal use of, but even without that I’ve never had a better test writing experience in any previous project of any language.

On writing a parser

ShellCheck was the first real parser I ever wrote. I’ve since taken up a day job as a compiler engineer, which helps to put a lot of it into perspective.

My most important lessons would be:

- Be careful if your parser framework makes it too easy to backtrack. Look up good parser design. I naively wrote a character based parser function for each construct like ${..}, $(..), $'..', etc, and now the parser has to backtrack a dozen times to try every possibility when it hits a $. With a tokenizer or a parser that read $ followed by {..}, (..) etc, it would have been much faster: in fact, just grouping all the $ constructs behind a lookahead decreased total checking time by 10%.

- Consistently use a tab stop of 1 for all column counts. This is what e.g. GCC does, and it makes life easier for everyone involved. ShellCheck used Parsec’s default of 8, which has been a source of alignment bugs and unnecessary conversions ever since.

- Record the full span and not just the start index of your tokens. Everyone loves squiggly lines under bad code. Also consider whether you want to capture comments and whitespace so you can turn the AST back into a script for autofixing. ShellCheck retrofitted end positions and emits autofixes as a series of text edits based on token spans, and it’s neither robust nor convenient.
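A minimal Parsec sketch of the "read `$` once, then dispatch" idea, which also records each token's full span. The `Dollar` and `Spanned` types and the simplified grammar (no nesting, quoting or escapes) are assumptions for illustration, not ShellCheck's actual parser:

```haskell
import Text.Parsec
import Text.Parsec.String (Parser)

-- The $-expansions recognized here (heavily simplified: no nesting or escapes).
data Dollar
  = Braced String    -- ${..}
  | Subshell String  -- $(..)
  | AnsiC String     -- $'..'
  | Plain String     -- $name
  deriving (Eq, Show)

-- A token annotated with its full source span, not only its start.
data Spanned = Spanned
  { spanStart :: SourcePos
  , spanEnd   :: SourcePos
  , spanValue :: Dollar
  } deriving Show

-- Read '$' exactly once, then dispatch on the next character.
-- Each branch starts with a distinct character, so a failing branch consumes
-- nothing and no 'try' (i.e. no backtracking over the '$') is needed.
dollarExpansion :: Parser Spanned
dollarExpansion = do
  start <- getPosition
  _ <- char '$'
  value <- choice
    [ Braced   <$> between (char '{') (char '}') (many1 (noneOf "}"))
    , Subshell <$> between (char '(') (char ')') (many1 (noneOf ")"))
    , AnsiC    <$> between (char '\'') (char '\'') (many (noneOf "'"))
    , Plain    <$> many1 (alphaNum <|> char '_')
    ]
  end <- getPosition
  pure (Spanned start end value)
```

Because `getPosition` is captured both before and after the token, each `Spanned` value carries a start and an end position that a diagnostic or autofix could use directly. Note that Parsec's `SourcePos` columns still advance to the next multiple of 8 on a tab character, which is the default the tab-stop point above warns about.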

ShellCheck’s parser has historically also been, let’s say, "pragmatic". For example, shell scripts are case sensitive, but ShellCheck accepted While in place of while for loops.

This is generally considered heresy, but it originally made sense when ShellCheck needed to be as helpful as possible on the first try for a known buggy script. Neither ShellCheck nor a human would point out that While sleep 1; do date; done has a misplaced do and done, but most linters would, since While is not considered a valid start of a loop.

These days it is not as useful, since any spurious warnings about do would disappear when the user fixed the warning for While and reran ShellCheck.

It also gets in the way for advanced users who e.g. write a function called While and capitalize it that way precisely because they don’t want it treated as a shell keyword. ShellCheck has rightly received some criticism for focusing too much on newbie mistakes at the expense of noise for advanced users. This is an active area of development.

If designed again, ShellCheck would parse more strictly according to spec, and instead make liberal use of lookaheads with pragmatic interpretations to emit warnings, even if it often resulted in a single useful warning at a time.
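A toy sketch of that design: the strict layer accepts only the exact keyword `while`, and a separate lookahead layer turns a keyword-like command followed by loop syntax into a single targeted hint. The word-based model, the `Result` type and the message wording are assumptions for illustration:

```haskell
import Data.Char (toLower)

data Result
  = LoopStart            -- the word is exactly the keyword "while"
  | PlainCommand String  -- any other first word is an ordinary command name
  | Hint String          -- a pragmatic diagnostic about a likely mistake
  deriving (Eq, Show)

-- Strict layer, per spec: keywords are case sensitive, so "While" is a command.
classifyStrict :: String -> Result
classifyStrict w
  | w == "while" = LoopStart
  | otherwise    = PlainCommand w

-- Pragmatic layer: look ahead on the rest of the line, and only when a
-- keyword-like command is followed by loop syntax do we emit a single hint.
classify :: String -> Maybe Result
classify line =
  case words line of
    [] -> Nothing
    (w : rest) -> Just $
      case classifyStrict w of
        PlainCommand name
          | map toLower name == "while", "do" `elem` rest, "done" `elem` rest ->
              Hint ("'" ++ name ++ "' is not a keyword; did you mean 'while'?")
        strict -> strict
```

A user-defined function called `While` without loop syntax after it, as in `classify "While --retry 3"`, is left alone as `Just (PlainCommand "While")`, which addresses the advanced-user case described above.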

On writing a static analysis tool

I hadn’t really pondered, didn’t really use, and definitely hadn’t written any static analysis or linting tools before. The first versions of ShellCheck didn’t even have error codes, just plain English text befitting an IRC bot.

- Supplement terse warnings with a wiki/web page. ShellCheck’s wiki has a page for each warning, like SC2162. It has an example of code that triggers, an example of a fix, a mention of cases where it may not apply, and has especially received a lot of praise for having an educational rationale explaining why this is worth fixing.

- You’re not treading new ground. There are well studied algorithms for whatever you want to do. ShellCheck has some simplistic ad-hoc algorithms for e.g. variable liveness, which could and should have been implemented using robust and well known techniques.

- If designed today with 20/20 hindsight, ShellCheck would have a plan to work with (or as) a Language Server to help with editor integrations.

- Include simple ways to suppress warnings. Since ShellCheck was originally intended for one-shot scenarios, this was an afterthought. It was then added on a statement level where the idea was that you could put special comments in front of a function, loop, or regular command, and it would apply to the entire thing. This has been an endless source of confusion (why can’t you put it in front of a case branch?), and should have been done on a per-line level instead.

- Give checks metadata so you can filter them. ShellCheck’s original checks were simple functions invoked for each AST node. Some of them only applied to certain shells, but would still be invoked thousands of times just to check that they don’t apply and return. Command specific checks would all duplicate and repeat the work of determining whether the current node was a command, and whether it was the relevant command. Disabled checks were all still run, and their hard work simply filtered out afterwards. With more metadata, these could have been more intelligently applied.

- Gather a test corpus! Examining the diff between runs on a few thousand scripts has been invaluable in evaluating the potential, true/false positive rate, and general correctness of checks.

- Track performance. I simply added time output to the aforementioned diff, and it stopped several space leaks and quadratic explosions.
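A sketch of how check metadata and per-line suppression might fit together. The `Check` record, `runChecks`, `directiveCodes` and `disabledOn` are hypothetical names. The `# shellcheck disable=SC2240` comment syntax is ShellCheck's, but the scoping here (a directive applies only to the line directly below it) is the per-line design recommended above, not ShellCheck's statement-level behavior:

```haskell
import Data.Char (isDigit)
import Data.List (isPrefixOf, stripPrefix)

data Shell = Sh | Dash | Bash deriving (Eq, Show)

-- A check carries metadata describing when it can possibly apply.
data Check = Check
  { checkCode :: Int                   -- SC code, e.g. 2240
  , shells    :: [Shell]               -- shells the check is relevant for
  , command   :: Maybe String          -- restrict to one command name, if any
  , run       :: [String] -> [String]  -- command arguments -> warning messages
  }

-- A warning reported against a 1-based line number.
data Warning = Warning { line :: Int, code :: Int, msg :: String } deriving (Eq, Show)

-- Example: the dot command with extra arguments, relevant only for sh/dash.
dotArgs :: Check
dotArgs = Check 2240 [Sh, Dash] (Just ".") $ \args ->
  [ "The dot command does not support arguments in sh/dash." | length args > 1 ]

-- Parse "# shellcheck disable=SC2162,SC2086" into [2162, 2086]; other lines give [].
directiveCodes :: String -> [Int]
directiveCodes l =
  case stripPrefix "# shellcheck disable=" (dropWhile (== ' ') l) of
    Nothing   -> []
    Just rest -> [ read ds | c <- splitCommas rest
                           , Just ds <- [stripPrefix "SC" c]
                           , not (null ds), all isDigit ds ]
  where
    splitCommas s = case break (== ',') s of
      (a, [])       -> [a]
      (a, _ : more) -> a : splitCommas more

-- Per-line suppression: only a directive on the line directly above counts.
disabledOn :: [String] -> Int -> [Int]
disabledOn ls n
  | n >= 2    = directiveCodes (ls !! (n - 2))
  | otherwise = []

-- Filter checks by shell and globally disabled codes *before* running them,
-- then only invoke a command-specific check on lines running that command.
runChecks :: Shell -> [Int] -> [Check] -> [String] -> [Warning]
runChecks shell disabled checks ls =
  [ Warning n (checkCode c) m
  | (n, l) <- zip [1 ..] ls
  , (cmd : args) <- [words l]
  , not ("#" `isPrefixOf` cmd)
  , c <- relevant
  , maybe True (== cmd) (command c)
  , checkCode c `notElem` disabledOn ls n
  , m <- run c args
  ]
  where
    relevant = [ c | c <- checks, shell `elem` shells c, checkCode c `notElem` disabled ]
```

Shell-specific and globally disabled checks are dropped once, before any line is examined, instead of being invoked and having their results filtered out afterwards.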

For a test corpus, I set up one script to scrape pastebin links from #bash@Freenode, and another to scrape scripts from trending GitHub projects.

The pastebin links were more helpful because they exactly represented the types of scripts that ShellCheck wanted to check. However, though they’re generally simple and posted publicly, I don’t actually have any rights to redistribute them, so I can’t really publish them to allow people to test their contributions.

The GitHub scripts are easier to redistribute since there’s provenance and semi-structured licensing terms, but they’re generally also less buggy and therefore less useful (except for finding false positives).

Today I would probably have tried parsing the Stack Exchange Data Dump instead.
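Whatever the corpus source, the core of the comparison is a diff between the warnings two builds emit for the same scripts. A minimal sketch, assuming each run's output has already been collected as (file, line, code) triples; the `Finding` type and `diffRuns` are hypothetical names, and `Data.Set` comes from the `containers` package that ships with GHC:

```haskell
import qualified Data.Set as Set

-- One reported warning: script path, line number, and SC code.
type Finding = (FilePath, Int, Int)

-- Compare a baseline run with a candidate run over the same corpus.
-- Returns (added, removed): added findings are candidate true or false
-- positives to review; removed ones show what the change fixed or broke.
diffRuns :: [Finding] -> [Finding] -> ([Finding], [Finding])
diffRuns old new =
  ( Set.toList (newSet `Set.difference` oldSet)
  , Set.toList (oldSet `Set.difference` newSet)
  )
  where
    oldSet = Set.fromList old
    newSet = Set.fromList new
```

Using sets keeps the comparison fast on a few thousand scripts and makes the result independent of the order in which files were checked.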

Finally, ShellCheck is generally reluctant to read arbitrary files (e.g. requiring a flag -x to follow included scripts). This is obviously because it was first a hosted service on IRC and web before containerization was made simple, and not because this is in any way helpful or useful for a local linter.

On having a side project while working at a large company

I worked at Google when I started ShellCheck. They were good sports about it, let me run the project and keep the copyright, as long as I kept it entirely separate from my day job. I later joined Facebook, where the policies were the same.

Both companies independently discovered and adopted ShellCheck without my input, and the lawyers stressed the same points:

- The company must not get, or appear to get, any special treatment because of you working there. For example, don’t prioritize bugs they find.

- Don’t contribute to anything related to the project internally. Not even if it’s work related. Not even if it’s not. Not even on your own time.

- If anyone assigns you a related internal task/bug, reject it and tell them they’ll have to submit a FOSS bug report.

And after discovering late in the interview process that Apple has a blanket ban on all programming related hobby projects:

- Ask any potential new employer about their side project policy early on.

On the name "ShellCheck"

I just thought it was a descriptive name with a cute pun. I had no idea that a portion of the population would consistently read "SpellCheck" no matter how many times they saw it. Sorry for the confusion!